"Agreement" means the terms and conditions for … Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 is capable of processing … Meta's license for the LLaMA models and code does not meet this standard; specifically, it puts restrictions on commercial use for …

Quick setup and how-to guide. Getting started with Llama: welcome to the getting started guide for Llama. Llama 2 is open source, with a license that authorizes commercial use. This is going to change the landscape of the LLM …

Meta Releases Llama 2 Generative AI Model, Challenging OpenAI and Google With Free Commercial Licensing (Voicebot.ai)

LLaMA-65B and Llama 2 70B perform optimally when paired with a GPU that has at least 40 GB of VRAM. Opt for a machine with a high-end GPU such as NVIDIA's RTX 3090 or RTX 4090 (24 GB each), or a dual-GPU setup, to accommodate the largest models (65B and 70B). We target 24 GB of VRAM. If you use Google Colab, you cannot run it on …

Reported speeds: 3.81 tokens per second for llama-2-13b-chat.ggmlv3.q8_0.bin (CPU only) and 2.24 tokens per second for llama-2-70b …

1. Background: I would like to run a 70B Llama 2 instance locally (not train, just run). Quantized to 4 bits, this is roughly 35 GB (on HF it's actually as …
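
The VRAM figures above follow from simple arithmetic: weight memory is roughly parameters × bits per weight ÷ 8 bytes, so one billion parameters at 8 bits is about 1 GB. The sketch below assumes the weights dominate memory use (KV cache, activations, and framework overhead are ignored) and uses nominal parameter counts; the 24 GB budget mirrors the target mentioned in the text.

```python
# Rough weight-only memory estimate for quantized models.
# Assumption: ignores KV cache, activations, and framework overhead,
# so real VRAM use will be somewhat higher than these figures.


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (1e9 params * bits / 8 bytes = GB)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: float, vram_gb: float) -> bool:
    """True if the weights alone fit in the given VRAM budget."""
    return weight_memory_gb(params_billion, bits_per_weight) <= vram_gb


if __name__ == "__main__":
    for name, params in [("7B", 7), ("13B", 13), ("65B", 65), ("70B", 70)]:
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{name} @ {bits}-bit: {gb:.1f} GB, fits in 24 GB: {fits(params, bits, 24)}")
```

With these assumptions a 4-bit 70B model needs about 35 GB for its weights, which is why it exceeds a single 24 GB card but fits under a 40 GB budget. Nominal sizes slightly overstate the smallest model: Llama 2 7B actually has about 6.7 billion parameters, which at 16 bits comes to roughly 13.5 GB.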

Our fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases. Our models outperform open-source chat models on most benchmarks we tested and, based on our human … In this section, we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware, and show how to fine-tune the 7B version of Llama 2 on a …

The process introduced above involves a supervised fine-tuning step using QLoRA on the 7B Llama 2 model, trained on the SFT split of the data via TRL's SFTTrainer. We successfully fine-tuned a 70B Llama model using PyTorch FSDP in a multi-node, multi-GPU setting while addressing various challenges. We saw how Transformers and …

The tutorial provided a comprehensive guide to fine-tuning the Llama 2 model using techniques like QLoRA, PEFT, and SFT to overcome memory and compute limitations.
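
QLoRA keeps the base model frozen in 4-bit precision and trains only small low-rank (LoRA) adapters, which is why the 7B model can be fine-tuned on modest hardware. The back-of-the-envelope sketch below counts how many trainable parameters such adapters add. It assumes rank 8 on the query and value projections of Llama 2 7B (hidden size 4096, 32 decoder layers); the rank and the choice of target matrices are hypothetical illustration values, not the tutorial's settings.

```python
# LoRA represents the update to a frozen d_out x d_in weight W as B @ A,
# with B of shape d_out x r and A of shape r x d_in, so each adapted
# matrix adds r * (d_in + d_out) trainable parameters.

from dataclasses import dataclass


@dataclass(frozen=True)
class LoraSetup:
    hidden_size: int = 4096  # Llama 2 7B hidden size
    num_layers: int = 32  # Llama 2 7B decoder layers
    rank: int = 8  # hypothetical LoRA rank r
    # Hypothetical choice: adapt only the query and value projections,
    # both square hidden_size x hidden_size matrices in Llama 2 7B.
    adapted_per_layer: int = 2


def lora_params_per_matrix(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters of one adapter (A: r x d_in, B: d_out x r)."""
    return rank * (d_in + d_out)


def total_lora_params(cfg: LoraSetup) -> int:
    """Trainable parameters summed over all adapted matrices in all layers."""
    per_matrix = lora_params_per_matrix(cfg.hidden_size, cfg.hidden_size, cfg.rank)
    return per_matrix * cfg.adapted_per_layer * cfg.num_layers


if __name__ == "__main__":
    cfg = LoraSetup()
    n = total_lora_params(cfg)
    print(f"trainable LoRA parameters: {n:,}")
    print(f"fraction of a nominal 7e9 base: {n / 7e9:.4%}")
```

Under these assumptions the adapters add 4,194,304 trainable parameters, well under 0.1% of the base model; doubling the rank doubles that count, while the frozen 4-bit base weights stay the same size.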

Meta And Microsoft Release Llama 2 Free For Commercial Use And Research

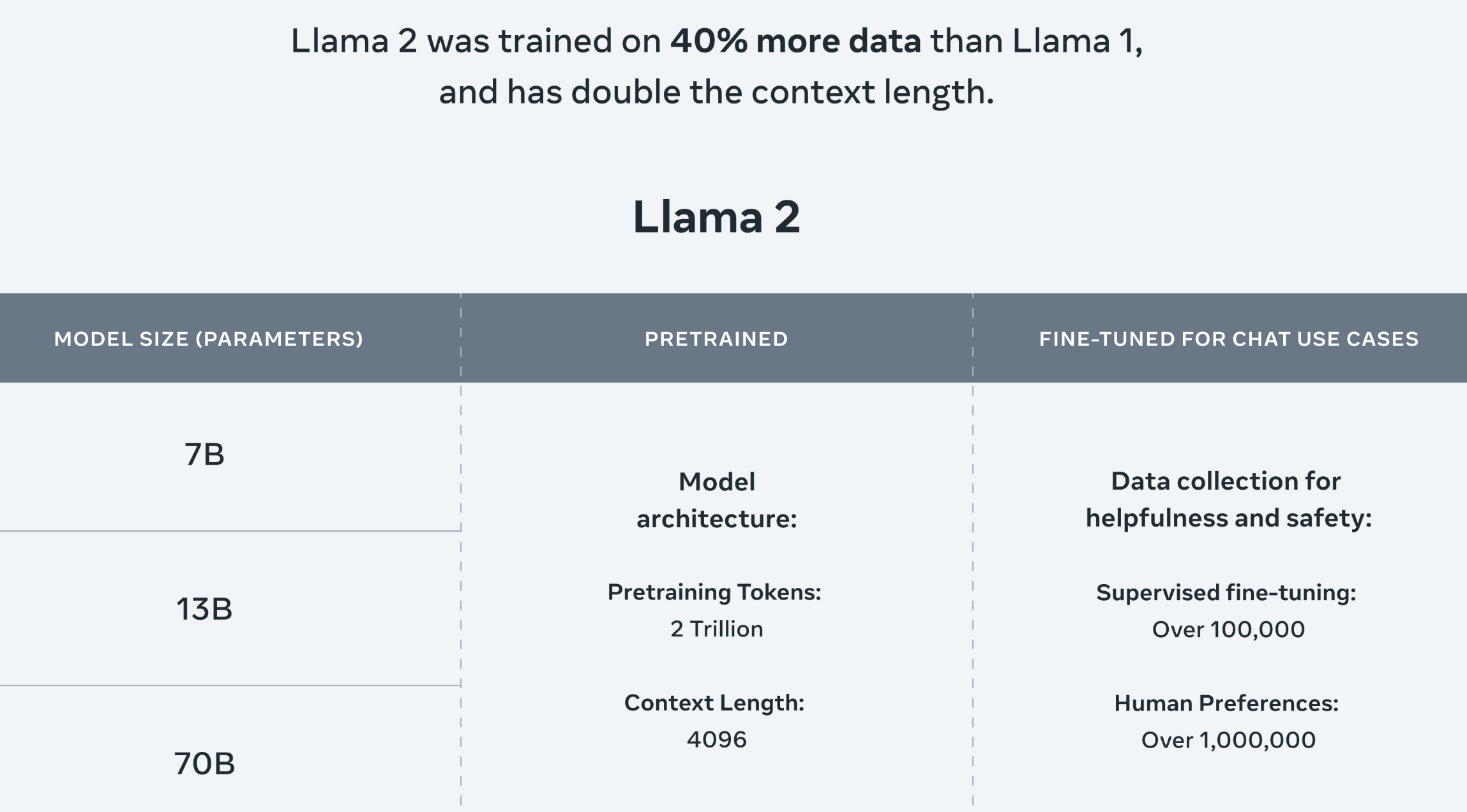

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. In this part, we will learn about all the steps required to fine-tune …

We explored quantizing a model with GGUF and llama.cpp in this article. We first looked at the benefits of model … The 7-billion-parameter version of Llama 2 weighs 13.5 GB; after 4-bit quantization with GPTQ, its size … We conducted benchmarks on both the Llama-2-7B-chat and Llama-2-13B-chat models, using 4 …

This release includes model weights and starting code for pretrained and fine-tuned Llama …
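
To make the idea of 4-bit quantization concrete, here is a minimal sketch of symmetric round-to-nearest quantization of one block of weights with a single shared scale. It is a simplified illustration only: it is neither the GPTQ algorithm nor the exact GGUF/llama.cpp block format, and the block contents and scaling rule are assumptions chosen for clarity.

```python
# Minimal symmetric 4-bit round-to-nearest quantization of one weight block.
# Simplified illustration: NOT GPTQ and NOT the exact GGUF/llama.cpp format.

from typing import List, Tuple

QMIN, QMAX = -8, 7  # signed 4-bit integer range


def quantize_block(weights: List[float]) -> Tuple[float, List[int]]:
    """Map floats to 4-bit integers using one shared scale per block."""
    max_abs = max(abs(w) for w in weights)
    # Scale so the largest magnitude maps to QMAX; guard against all-zero blocks.
    scale = max_abs / QMAX if max_abs > 0 else 1.0
    q = [max(QMIN, min(QMAX, round(w / scale))) for w in weights]
    return scale, q


def dequantize_block(scale: float, q: List[int]) -> List[float]:
    """Recover approximate floats from the integers and the shared scale."""
    return [scale * v for v in q]


if __name__ == "__main__":
    block = [0.12, -0.53, 0.07, 0.91, -0.30, 0.44, -0.88, 0.01]
    scale, q = quantize_block(block)
    approx = dequantize_block(scale, q)
    worst = max(abs(a - b) for a, b in zip(block, approx))
    print("ints:", q)
    print(f"scale={scale:.4f}, max abs error={worst:.4f}")
```

Each weight now needs only 4 bits, and the rounding error per weight is at most half the scale. Real formats also store the per-block scale alongside the integers, so the effective size is slightly above 4 bits per weight.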
